The relationship between machine learning and control theory lies at the core of advanced industrial applications, including power plant and robot arm control, as well as discrete action planning for navigation or routing. While much of this theory has deep roots, recent advancements in deep reinforcement learning (RL) and automatic differentiation have unearthed new results that blur the lines between control theory and RL, in particular model-based RL.

This intensive one-day training bridges the gap between theoretical concepts and real-world applications, equipping participants with an understanding of how control theory and machine learning relate to each other.

Starting with an introduction to classical control fundamentals, the course provides a solid foundation before moving on to optimal control methods. We investigate various ways in which problems can be formulated as control or planning tasks, providing a comprehensive perspective for attendees.

Through practical examples, participants will also gain experience in system identification and control, using both optimal control and machine learning approaches, including neural network modeling and dynamic mode decomposition (DMD).
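System identification means learning a model of a system's dynamics from recorded data. It can be illustrated with a small regression experiment. The sketch below assumes a hypothetical damped pendulum with made-up parameters and simulates data from it. It then fits two least-squares models of the next state: one linear in the state and input, and one with an extra sin(θ) feature. This regression is only a simple stand-in for more flexible models such as neural networks, and all helper names (`collect`, `features`, `fit`, `rollout_rmse`) are illustrative.

Each model's fit is judged by a multi-step rollout error, in which the model is fed its own predictions. When a model is meant for control, this is usually more revealing than one-step error.

```python
import numpy as np

# Hypothetical "true" system (unknown to the learner): damped pendulum, Euler-discretized
g, l, c, dt = 9.81, 1.0, 0.5, 0.02  # gravity, length, damping, time step (assumed values)

def true_step(x, u):
    theta, omega = x
    return np.array([theta + dt * omega,
                     omega + dt * (-g / l * np.sin(theta) - c * omega + u)])

def collect(n_steps, theta0, rng):
    """Record one trajectory driven by random inputs, starting at rest at angle theta0."""
    x = np.array([theta0, 0.0])
    X, U, Xn = [], [], []
    for _ in range(n_steps):
        u = rng.uniform(-2.0, 2.0)
        xn = true_step(x, u)
        X.append(x); U.append(u); Xn.append(xn)
        x = xn
    return np.array(X), np.array(U), np.array(Xn)

def features(X, U, use_sin):
    """Regression features: [theta, omega, u] plus, optionally, sin(theta)."""
    cols = [X[:, 0], X[:, 1], U]
    if use_sin:
        cols.append(np.sin(X[:, 0]))
    return np.column_stack(cols)

def fit(X, U, Xn, use_sin):
    """Least-squares fit of x_{k+1} = phi(x_k, u_k) @ W."""
    W, *_ = np.linalg.lstsq(features(X, U, use_sin), Xn, rcond=None)
    return W

def rollout_rmse(W, X, U, use_sin):
    """Multi-step error: start from the first true state and feed back the model's predictions."""
    x, preds = X[0], []
    for u in U:
        preds.append(x)
        x = features(x[None, :], np.array([u]), use_sin)[0] @ W
    return np.sqrt(np.mean((np.array(preds) - X) ** 2))

rng = np.random.default_rng(0)
train = [collect(200, rng.uniform(-2.0, 2.0), rng) for _ in range(10)]
X, U, Xn = (np.concatenate(parts) for parts in zip(*train))
X_test, U_test, _ = collect(200, 1.5, rng)  # held-out trajectory with a large initial angle

rmse_lin = rollout_rmse(fit(X, U, Xn, use_sin=False), X_test, U_test, use_sin=False)
rmse_sin = rollout_rmse(fit(X, U, Xn, use_sin=True), X_test, U_test, use_sin=True)
print(f"rollout RMSE, linear model: {rmse_lin:.3g}, with sin feature: {rmse_sin:.3g}")
```

In this toy setup, the model with the sin(θ) feature can represent the simulated dynamics exactly, so its rollout error is essentially zero. The linear model drifts away at large angles. With real data, the right model class is not known in advance, which is where flexible learners such as neural networks come in.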

Prerequisites

As a prerequisite for this training, participants should have basic knowledge of control theory. We therefore recommend our short online Classical Control training to participants who have no previous background in control theory or who wish to refresh their knowledge. In addition, the training's hands-on tasks require basic knowledge of Python.

In essence, participants should be familiar with the following:

- Classical Control.

- State-Space representation.

- Linear Controller Design.

- Challenges of Classical Control.

- Python.

All topics, except for Python, are covered in the classical control training.

It is also beneficial, but not required, if participants are familiar with the following Python libraries:

Contents

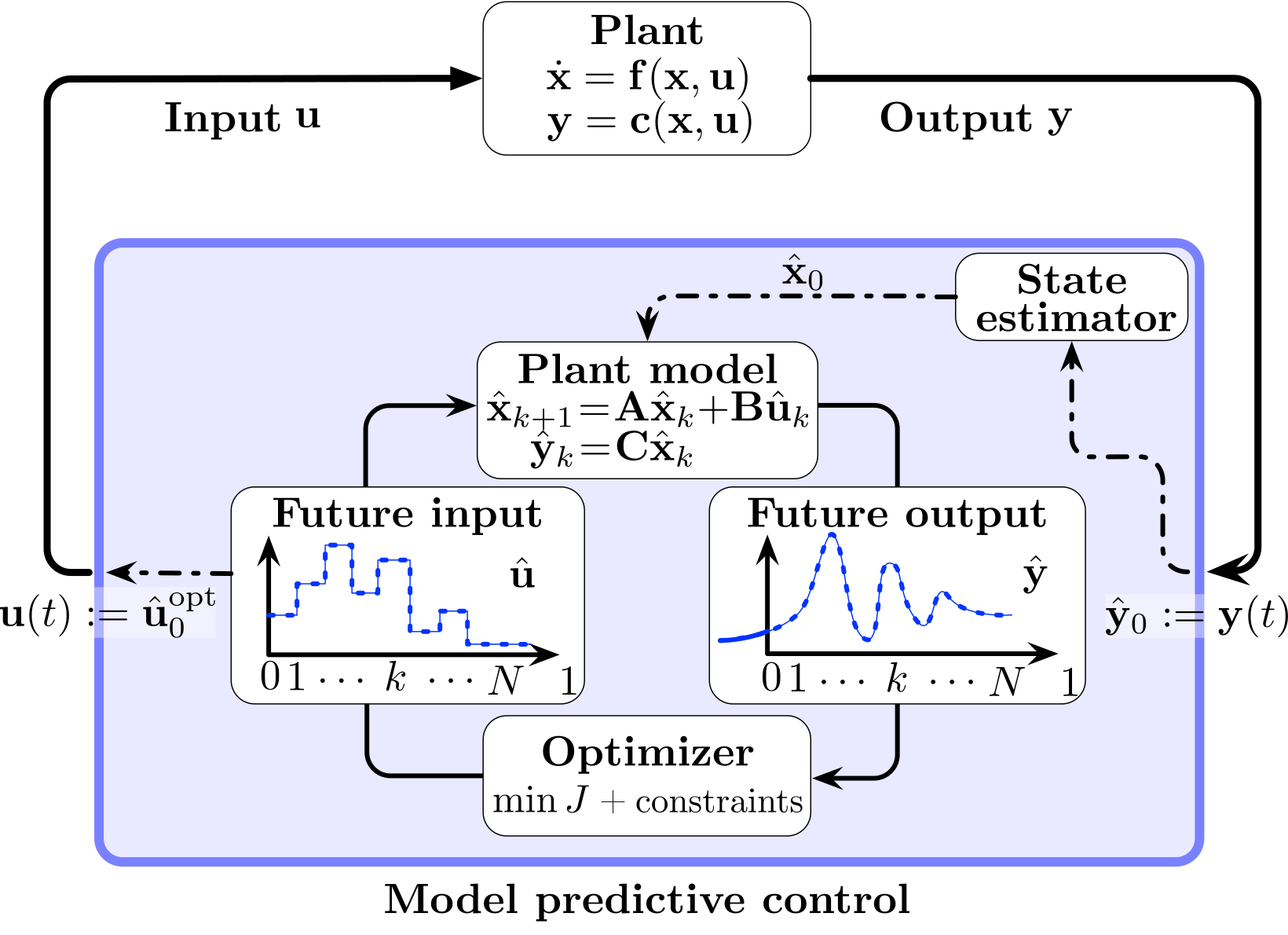

Model-Predictive Control (MPC) block diagram. Reproduced from [Bru22M].

We've published the full content of the training on GitHub.

Part 1: Optimal Control

In this part, we will introduce Optimal Control with a special focus on Model-Predictive Control (MPC). We will explain the theory behind MPC and its variants, and we will apply it to control an inverted pendulum system using both a linearized model and a nonlinear model.
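The following is a minimal sketch of unconstrained linear MPC, to give a flavor of the receding-horizon idea. It assumes a simple torque-driven pendulum with made-up parameters, linearized about the upright position and discretized with forward Euler. This is not necessarily the pendulum model used in the training. At every step, the controller minimizes a quadratic cost over an N-step horizon in closed form, then applies only the first input. The helper names `prediction_matrices` and `make_mpc`, the weights Q and R, and the horizon N are all illustrative assumptions. Practical MPC usually adds input and state constraints, which turn the linear solve into a quadratic program.

```python
import numpy as np

# Hypothetical pendulum parameters (assumed for illustration)
m, l, g = 1.0, 1.0, 9.81   # mass [kg], length [m], gravity [m/s^2]
dt = 0.05                  # sampling time [s]

# Linearized about the upright equilibrium theta = 0 and Euler-discretized:
# x_{k+1} = A x_k + B u_k, with state x = [theta, omega] and torque input u
A = np.array([[1.0, dt],
              [dt * g / l, 1.0]])
B = np.array([[0.0],
              [dt / (m * l**2)]])

def prediction_matrices(A, B, N):
    """Stack predictions x_1..x_N as X = Phi @ x0 + Gamma @ U, with U = [u_0; ...; u_{N-1}]."""
    n, nu = B.shape
    Phi = np.vstack([np.linalg.matrix_power(A, k) for k in range(1, N + 1)])
    Gamma = np.zeros((N * n, N * nu))
    for i in range(N):           # block row i predicts x_{i+1}
        for j in range(i + 1):   # effect of input u_j on x_{i+1}
            Gamma[i*n:(i+1)*n, j*nu:(j+1)*nu] = np.linalg.matrix_power(A, i - j) @ B
    return Phi, Gamma

def make_mpc(A, B, Q, R, N):
    """Cost: sum_{k=1..N} x_k^T Q x_k + sum_{k=0..N-1} u_k^T R u_k, no constraints."""
    Phi, Gamma = prediction_matrices(A, B, N)
    Qbar, Rbar = np.kron(np.eye(N), Q), np.kron(np.eye(N), R)
    H = Gamma.T @ Qbar @ Gamma + Rbar   # Hessian (up to a factor 2) of the cost in U
    F = Gamma.T @ Qbar @ Phi            # cost gradient is 2 * (H @ U + F @ x0)
    nu = B.shape[1]
    def control(x0):
        U = np.linalg.solve(H, -F @ x0)  # optimal input sequence over the horizon
        return U[:nu]                    # receding horizon: apply only the first input
    return control

controller = make_mpc(A, B, Q=np.diag([10.0, 1.0]), R=np.array([[0.1]]), N=30)

# Closed loop on the nonlinear pendulum (Euler steps), starting 0.2 rad from upright
x = np.array([0.2, 0.0])
for _ in range(200):
    u = controller(x)[0]
    theta, omega = x
    x = np.array([theta + dt * omega,
                  omega + dt * (g / l * np.sin(theta) + u / (m * l**2))])
print(x)  # both entries should be close to zero
```

Without constraints, the optimal first input is a fixed linear function of the state, so this sketch behaves like a finite-horizon LQR controller. The horizon was chosen long enough to stabilize this particular example. Finite-horizon MPC does not guarantee closed-loop stability in general.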

Part 2: Machine Learning in Control

In this part, we will show that, unlike typical Reinforcement Learning approaches, which aim to replace Control Theory, Machine Learning can instead be used to augment it and alleviate some of its shortcomings. More specifically, we will show how to learn a system's dynamic model from data using different approaches, including fitting a neural network. We will then address how to evaluate how well these models fit and how to use them to determine the best control signal. Finally, we will present more recent developments in this direction: the Koopman operator and Dynamic Mode Decomposition (DMD).

Overview of Dynamic Mode Decomposition with Control (DMDc). Adapted from [Pro16D].
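The central regression step of DMDc can be sketched in a few lines of NumPy. Assume three snapshot matrices are available: X (states), X' (the same states one step later) and Υ (inputs). The sketch then estimates A and B in x_{k+1} ≈ A x_k + B u_k from a possibly truncated SVD of the stacked matrix [X; Υ].

This is a minimal sketch of the variant where B is unknown. It stops at A and B and omits the further projection onto a reduced basis that DMDc uses for high-dimensional states. The function name `dmdc` and the test system are assumptions for illustration.

```python
import numpy as np

def dmdc(X, Xp, Ups, r=None):
    """Estimate A, B in Xp ~= A @ X + B @ Ups (DMD with control, unknown B).

    X, Xp: state snapshots (n x m), Xp shifted one step ahead of X.
    Ups:   input snapshots (l x m).
    r:     optional truncation rank for the SVD of Omega = [X; Ups].
    """
    n = X.shape[0]
    Omega = np.vstack([X, Ups])
    U, s, Vt = np.linalg.svd(Omega, full_matrices=False)
    if r is not None:  # keep only the r dominant singular directions
        U, s, Vt = U[:, :r], s[:r], Vt[:r, :]
    # Pseudoinverse Omega^+ ~= V S^{-1} U^T, hence G = [A B] ~= Xp Omega^+
    G = Xp @ Vt.T @ np.diag(1.0 / s) @ U.T
    return G[:, :n], G[:, n:]

# Hypothetical linear test system with 3 states and 1 input
rng = np.random.default_rng(0)
A_true = np.array([[0.9, 0.1, 0.0],
                   [0.0, 0.8, 0.2],
                   [0.0, 0.0, 0.7]])
B_true = np.array([[0.0], [0.0], [1.0]])

m_snap = 50
Ups = rng.standard_normal((1, m_snap))  # random excitation
x = rng.standard_normal(3)
states = [x]
for k in range(m_snap):
    x = A_true @ x + B_true @ Ups[:, k]
    states.append(x)
traj = np.column_stack(states)
X, Xp = traj[:, :-1], traj[:, 1:]

A_hat, B_hat = dmdc(X, Xp, Ups)
print(np.round(A_hat, 3))
print(np.round(B_hat, 3))
```

With noise-free data and no truncation, the regression recovers the true matrices. On real, noisy, high-dimensional data, choosing the truncation rank `r` is the main design decision.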

Learning outcomes

Upon completion of the training, participants will have learned about:

- Control theory and its different branches.

- The relationship between control and machine learning.

- Typical control problems.

- Basics of planning and decision-making: plans, environments, trajectories, rewards, and costs.

- Planning-based control.

- Learning-based control and system identification.
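A small example makes the planning vocabulary concrete: environment, actions, costs, plans and trajectories. The sketch below plans by dynamic programming on a hypothetical grid world with a cost of one per move. Value iteration computes the cost-to-go of every cell, and acting greedily on that cost-to-go yields an optimal plan. The grid, the unit cost and the function names are assumptions for illustration, not material from the training.

```python
import numpy as np

# Hypothetical grid world: '.' free cell, '#' wall, 'G' goal
GRID = [
    ".....",
    ".###.",
    "...#.",
    ".#...",
    "...#G",
]
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

def step(state, action):
    """Deterministic environment: move one cell, or stay put if blocked by a wall or the border."""
    r, c = state
    dr, dc = ACTIONS[action]
    nr, nc = r + dr, c + dc
    if 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0]) and GRID[nr][nc] != "#":
        return (nr, nc)
    return state

def value_iteration(max_iters=100):
    """Bellman iteration for the cost-to-go: V(s) = min_a [1 + V(step(s, a))], V(goal) = 0."""
    rows, cols = len(GRID), len(GRID[0])
    V = np.full((rows, cols), np.inf)
    goal = next((r, c) for r in range(rows) for c in range(cols) if GRID[r][c] == "G")
    V[goal] = 0.0
    for _ in range(max_iters):
        V_new = V.copy()
        for r in range(rows):
            for c in range(cols):
                if GRID[r][c] in "#G":
                    continue
                V_new[r, c] = min(1.0 + V[step((r, c), a)] for a in ACTIONS)
        if np.array_equal(V_new, V):  # converged
            break
        V = V_new
    return V, goal

def plan(start, V, goal):
    """Follow the action with the lowest cost-to-go; the visited states form the trajectory."""
    path, s = [start], start
    while s != goal:
        a = min(ACTIONS, key=lambda a: V[step(s, a)])
        s = step(s, a)
        path.append(s)
    return path

V, goal = value_iteration()
path = plan((0, 0), V, goal)
print(V[0, 0], path)
```

The same recursion underlies optimal control over continuous states. There, the cost-to-go can no longer be tabulated cell by cell, which is one motivation for the model-based and learning-based methods covered in the training.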