Many recent neural network-based methods in simulation-based inference (SBI) use normalizing flows as conditional density estimators. However, density estimation for SBI is not limited to normalizing flows—one could use any flexible density estimator. For example, continuous normalizing flows trained with flow matching have been introduced for SBI recently [Wil23F]. Given the success of score-based diffusion models [Son19G], it seems promising to apply them to SBI as well, which is the primary focus of the paper presented here [Sha22S].

Diffusion models for SBI

The idea of diffusion models is to learn sampling from a target distribution, here $p(\cdot \mid x)$, by gradually adding noise to samples $\theta_0 \sim p(\cdot \mid x)$ until they converge to a stationary distribution $\pi$ that is easy to sample, e.g., a standard Gaussian. On the way, one learns to systematically reverse this process of diffusing the data. Subsequently, it becomes possible to sample from the simple noise distribution and to gradually transform the noise sample back into a sample from the target distribution.

More formally, the forward noising process $(\theta_t)_{t \in [0, T]}$ can be defined as a stochastic differential equation (SDE)

$$ d\theta_t = f_t(\theta_t)dt + g_t(\theta_t)dw_t, $$

where $f_t: \mathbb{R}^d \to \mathbb{R}^d$ is the drift coefficient, $g_t: \mathbb{R}^d \to \mathbb{R} \times \mathbb{R}^d$ is the diffusion coefficient, and $w_t$ is a standard $\mathbb{R}^d$-valued Brownian motion.

Under mild conditions, the time-reversed process $(\bar{\theta}_t) := (\theta_{T-t})_{t \in [0, T]}$ is also a diffusion process [And82R], evolving according to

$$ d\bar{\theta}_t = [-f_{T-t}(\bar{\theta}_t) + g^2_{T-t}(\bar{\theta}_t)\nabla_{\theta}\log p_{T-t}(\bar{\theta}_t \mid x)]dt +g_{T-t}(\bar{\theta}_t)dw_t. $$

Given these two processes, one can diffuse a data point $\theta_0 \sim p(\cdot \mid x)$ into noise $\theta_T \sim \pi$ by running the forward noising process and reconstruct it as $\bar{\theta}_T \sim p_T(\cdot \mid x) = p(\cdot \mid x)$ using the time-reversed denoising process.

In the SBI setting, we do not have access to the scores of the true posterior, $\nabla_{\theta}\log p_t(\theta_t \mid x)$. However, we can approximate the scores using score-matching [Son21S].

Score-matching for SBI

One way to perform score-matching is training a time-varying score network $s_{\psi}(\theta_t, x, t) \approx \nabla_\theta \log p_t(\theta_t \mid x)$ to approximate the score of the perturbed posterior. This network can be optimized to match the unknown posterior by minimizing the conditional denoising posterior score matching objective given by

$$ \mathcal{J}^{DSM}_{post}(\psi) = \frac{1}{2}\int_0^T \lambda_t \mathbb{E}[||s_{\psi}(\theta_t, x, t) - \nabla_\theta \log p_{t|0}(\theta_t \mid \theta_0)||^2]dt. $$

Note that this objective does not require access to the actual score function of the posterior $\nabla_\theta \log p_t(\theta_t \mid x)$, but only to that of the transition density $p_{t|0}(\theta_t \mid \theta_0)$, which is defined by the forward noising process (see paper for details). The expectation is taken over $p_{t|0}(\theta_t \mid \theta_0) p(x \mid \theta_0) p(\theta_0)$, i.e., over samples from the forward noise process, samples from the likelihood (the simulator) and samples from the prior. Thus, the training routine for performing score-matching in the SBI setting amounts to

- Draw samples $\theta_0 \sim p(\theta)$ from the prior, simulate $x \sim p(x \theta_0)$ from the likelihood, and obtain $\theta_t \sim p_{t|0}(\theta_t \mid \theta_0)$ using the forward noising process.

- Use these samples to train the time-varying score network, minimizing a Monte Carlo estimate of the denoising score matching objective.

- Generate samples from the approximate score-matching posterior $\bar{\theta}_T \sim p(\theta \mid x_o)$ by sampling $\bar{\theta}_0 \sim \pi$ from the noise distribution and plugging $\nabla_\theta \log p_t(\theta_t \mid x_o) \approx s_{\psi}(\theta_t, x_o, t)$ into the reverse-time process to obtain $\bar{\theta}_T$.

The authors call their approach neural posterior score estimation (NPSE). In a similar vein, score-matching can be used to approximate the likelihood $p(x \mid \theta)$, resulting in neural likelihood score estimation (NLSE) (requiring additional sampling via MCMC or VI).

Sequential neural score estimation

Neural posterior estimation enables amortized inference: once trained, the conditional density estimator can be applied to various $x_o$ to obtain corresponding posterior approximations with a single forward pass through the network. In some scenarios, amortization is an excellent property. However, if simulators are computationally expensive and one is interested in only a particular observation $x_o$, sequential SBI methods can help to explore the parameter space more efficiently, obtaining a better posterior approximation with fewer simulations.

The idea of sequential SBI methods is to extend the inference over multiple rounds: In the first round, training data comes from the prior. In the subsequent rounds, a proposal distribution tailored to be informative about $x_o$ is used instead, e.g., the current posterior estimate. Because samples in those rounds do not come from the prior, the resulting posterior will not be the desired posterior but the proposal posterior. Several variants of sequential neural posterior estimation have been proposed, each with its own strategy for correcting this mismatch to recover the actual posterior (see [Lue21B] for an overview).

[Sha22S] present score-matching variants for both sequential NPE (similar to the one proposed in [Gre19A]) and for sequential NLE.

Empirical results

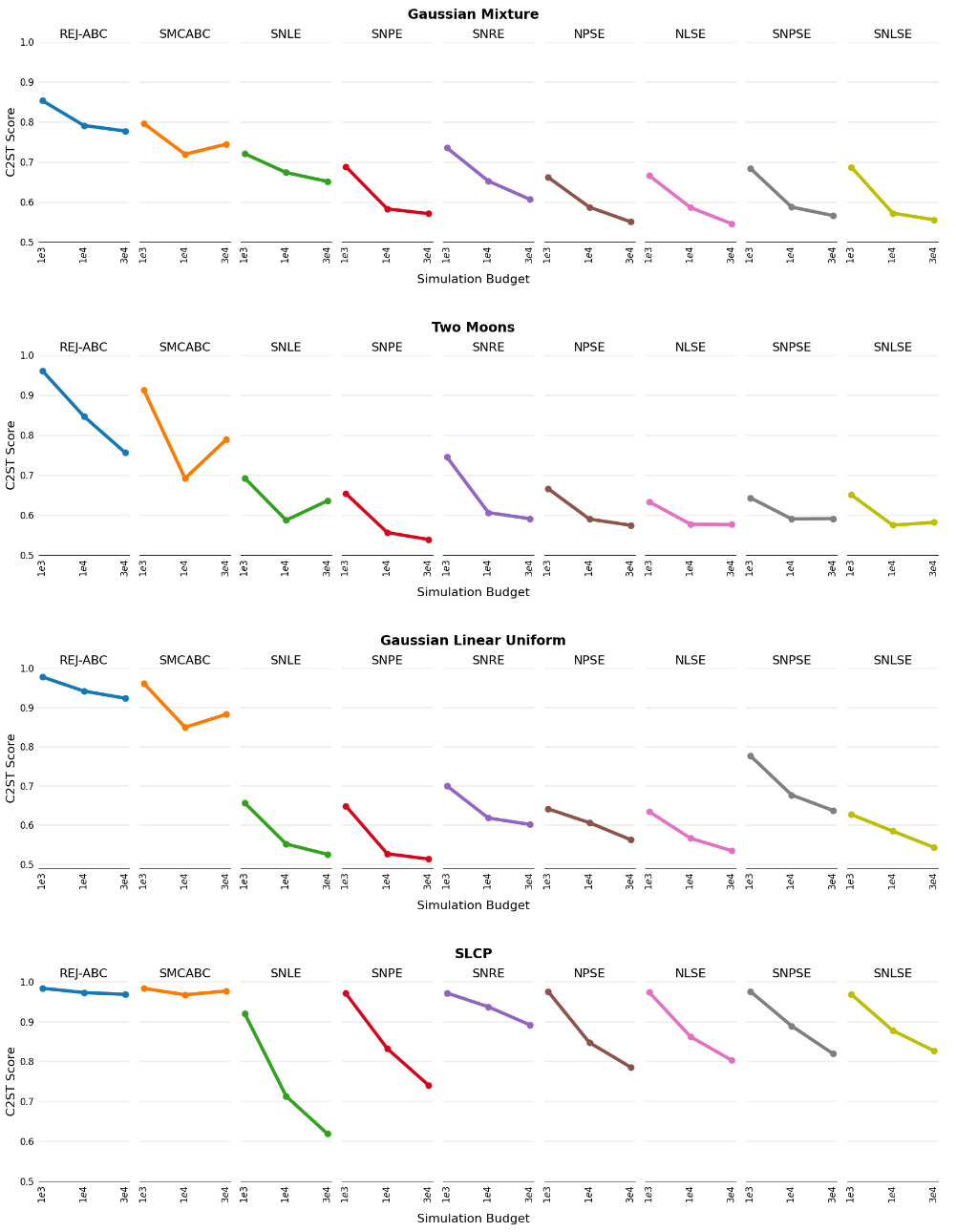

Figure 1. [Sha22S], Figure 2. Posterior accuracy of various SBI methods on four benchmarking tasks. Measured in two-sample classification test accuracy (C2ST, 0.5 is best).

The authors evaluate their approach on a set of four SBI benchmarking tasks [Lue21B]. They find that score-based methods for SBI perform on par with and, in some cases, better than existing flow-based SBI methods (Figure 1).

Limitations

With score-based diffusion models, this paper presented a potent conditional density estimator for SBI. It demonstrated similar performance to existing SBI methods on a subset of benchmarking tasks, particularly when simulation budgets were low, such as in the two moons task. However, the authors did not extend their evaluation to real-world SBI problems, which are typically more high-dimensional and complex than the benchmarking tasks.

It is important to note that diffusion models can be more computationally intensive during inference time than existing methods. For instance, while normalizing flows can be sampled and evaluated with a single forward pass through the neural network, diffusion models necessitate solving an SDE to obtain samples or log probabilities from the posterior. Therefore, akin to flow-matching methods for SBI, score-matching methods represent promising new tools for SBI, but they imply a trade-off at inference time that will depend on the specific problem.